Difference between revisions of "Quality Assurance Initiative"

| Line 77: | Line 77: | ||

** Arrange: create the data and objects needed to perform the tested operation; | ** Arrange: create the data and objects needed to perform the tested operation; | ||

** Act: run the tested operation; | ** Act: run the tested operation; | ||

** Assert: use EXPECT_ and ASSERT_ macros from GTest to check that the behaviour is as expected. | ** Assert: use <code>EXPECT_*</code> and <code>ASSERT_*</code> macros from GTest to check that the behaviour is as expected. | ||

* Remember to test unexpected and corner cases, invalid input, fallback behaviours. | * Remember to test unexpected and corner cases, invalid input, fallback behaviours. | ||

* If your test needs custom set-up and tear-down, use a custom test fixture and TEST_F macros. | * If your test needs custom set-up and tear-down, use a custom test fixture and <code>TEST_F</code> macros. | ||

* If your test needs to run on several cases parametrized by input data, consider using a TEST_P fixture. | * If your test needs to run on several cases parametrized by input data, consider using a <code>TEST_P</code> fixture. | ||

Revision as of 17:55, 17 December 2023

|

This page is a stub. You can help Inkscape Wiki by expanding it |

This page is a work in progress (2023-12-16).

The Quality Assurance Initiative aims to improve the quality and stability of Inkscape and to reduce the number of bugs and regressions, especially severe ones.

In a nutshell, the goal is to have more of:

- Test coverage of source code (unit tests, component/integration tests, end-to-end tests);

- Test automation, developer-friendly testing frameworks and tooling;

- Good practices related to coding and testing;

- Improvements to the code review process and release management;

- Focus on quality, especially during the release period.

In this way, we would like to have less of:

- Crashes and data loss bugs;

- Memory leaks and corruption;

- Glitches, corner cases and difficult-to-reproduce bugs;

- Fragility due to hidden assumptions and limitations;

- Legacy code;

- Code that nobody understands and maintains.

Automated testing

Inkscape's CI pipeline involves a number of unit, integration and end-to-end tests. However, we recognise that the overal test coverage is very poor as of late 2023. One of the main goals of this initiative is to track the test coverage and to work on increasing it.

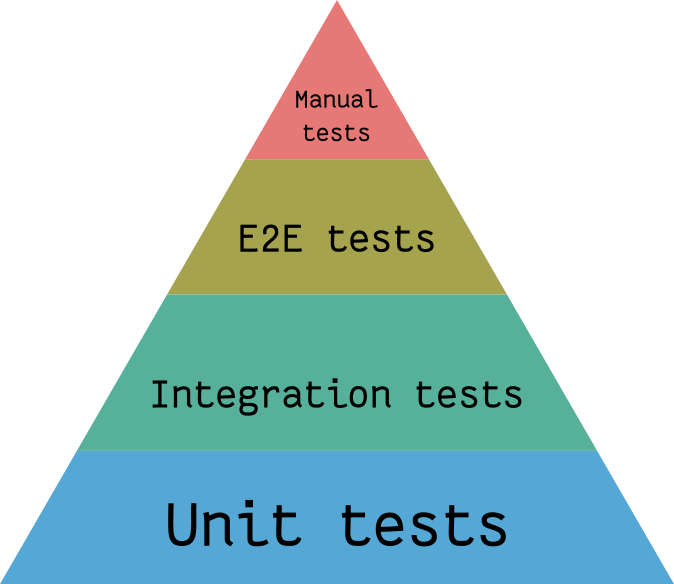

In the context of automated tests, we recall the famous Testing Pyramid (see right). It expresses the principle that tests at unit and component level should be the most prevalent ones, followed by a smaller number of integration tests, and topped off with a relatively lower number of end-to-end tests. Unfortunately, this is not the case today: we have too few unit tests. Manual testing should be limited to UX and usability aspects.

Why does Inkscape not have enough unit tests

- Historical reasons: feature development was prioritized and testing was never formally enforced.

- Work on tests and QA topics may be perceived as less "flashy" because it is less visible to the end user.

- Many new developments interact with and depend on legacy code which is hard to split off and mock. Thus, new code is hard to test.

- We don't have enough tooling such as automated test coverage reports or custom test fixtures to facilitate test development.

- Varied knowledge level of contributors, no expertise in testing.

What can be done about it

- Code review should emphasize the importance of adding unit tests for new functionality and regression tests for bug fixes.

- Quality and maintainability should be viewed as core aspects and as an important part of Inkscape's development.

- Legacy code must be managed carefully, see below.

- Test coverage reports should be introduced along with facilities that make it easy and pleasant to write tests.

- By increasing the number of tests, we can increase the knowledge and awareness of testing techniques in the community – learning from examples.

Dealing with legacy code

According to Michael Feathers, the author of the influential book "Working effectively with legacy code", legacy code is not defined as "old code", "code written in C" or in general "code that nobody maintains". Instead, legacy code is code that is not testable and not tested. We recognise that Inkscape contains a lot of such code.

The Vicious Cycle of Legacy Code

The fundamental problem with legacy code is that it's risky to change it because a small change in one place can have unpredictable and unintended consequences in other places. This has happened many times before and has led to severe bugs in Inkscape. In an ideal world, the existing code could be first enriched with "characterization tests" that capture its current behaviour, and tests could also be added for the new behaviour. In this way, we could assure that the development works as intended and doesn't break anything. But, according to our definition, legacy code is not testable so this cannot be done.

In this way, we are stuck in a vicious cycle: to make code testable, we need to refactor it in order to make it testable, but refactoring can break things badly unless we have tests, which unfortunately we don't and which cannot be added because the code is not testable.

To break out of this cycle, we need to start small, adding tests to simple and relatively independent pieces of functionality. It is helpful to identify the "seams", which usually correspond to interfaces, along which the program or a system can be natuarally divided into pieces. If these pieces are simple enough, they can be made testable. However, even with this approach, a fair bit of mocking may be required to simulate external dependencies, which makes it a tedious task.

Refactoring and development techniques to increase testability

A large portion of Inkscape's codebase is made up of code that's very literal, low-level and suffers from a lack of abstraction. Desigining good interfaces and abstraction is an important goal that should be kept in mind not only during major architectural updates, but also our everyday work. Whenever you work on Inkscape, ask yourself the question: "Does this change make the code I'm working on more or less testable?". Below are some concrete examples of techniques that can help increase testability.

Extract and mock/override

- Identify the piece of code that makes the functionality non-testable.

- Move this piece of code to a separate function(s) or a class. Moving code is safer than rewriting it, because the functional logic is kept unchanged.

- In tests, mock the class or function that the problematic code was moved to. This lets you test new functionality independently of the legacy code.

- If you need to extend the functionality provided by the non-testable code, try doing it without touching the untested function, but rather wrap around it or provide an override.

Decorator pattern

This design pattern helps extend a legacy class with new functionality. Instead of adding the new functionality directly to the non-testable class (which would make the new functionality non-testable as well), write a wrapper around the legacy class. In the decorator pattern, the decorator class holds an instance of the wrapped class and provides an interface that forwards member function calls to the wrapped object. However, some of the member functions of the wrapper can provide additional functionality or further work on the values obtained from the wrapped object, which makes it possible to add new behaviours. This new functionality can now be tested by mocking the wrapped class in tests.

Façade transformation

This technique helps when dealing with huge classes that hold too much data and that have too many member functions, making them complex, difficult to test, and increasing the coupling with other parts of the code. The façade transformation replaces the large class with a façade class which internally holds instances of several smaller classes. These smaller classes need to be designed by identifying the functionally cohesive pieces of the original large class and splitting them up. The member functions of the façade delegate to member function calls on these internal instances, sometimes more than one at a time. This transformation can be repeated if needed, until the classes are small enough that testing them is possible. The advantage of the façade transformation is that the API exposed by the large class is unchanged.

Guidelines on writing unit tests

- Create a separate test executable for each class or functional module.

- Write a separate test case for each of the tested behaviours.

- Write a doxygen-style comment above each test case, explaining very briefly what this case is testing.

- In each test case, follow the Arrange-Act-Assert principle:

- Arrange: create the data and objects needed to perform the tested operation;

- Act: run the tested operation;

- Assert: use

EXPECT_*andASSERT_*macros from GTest to check that the behaviour is as expected.

- Remember to test unexpected and corner cases, invalid input, fallback behaviours.

- If your test needs custom set-up and tear-down, use a custom test fixture and

TEST_Fmacros. - If your test needs to run on several cases parametrized by input data, consider using a

TEST_Pfixture.